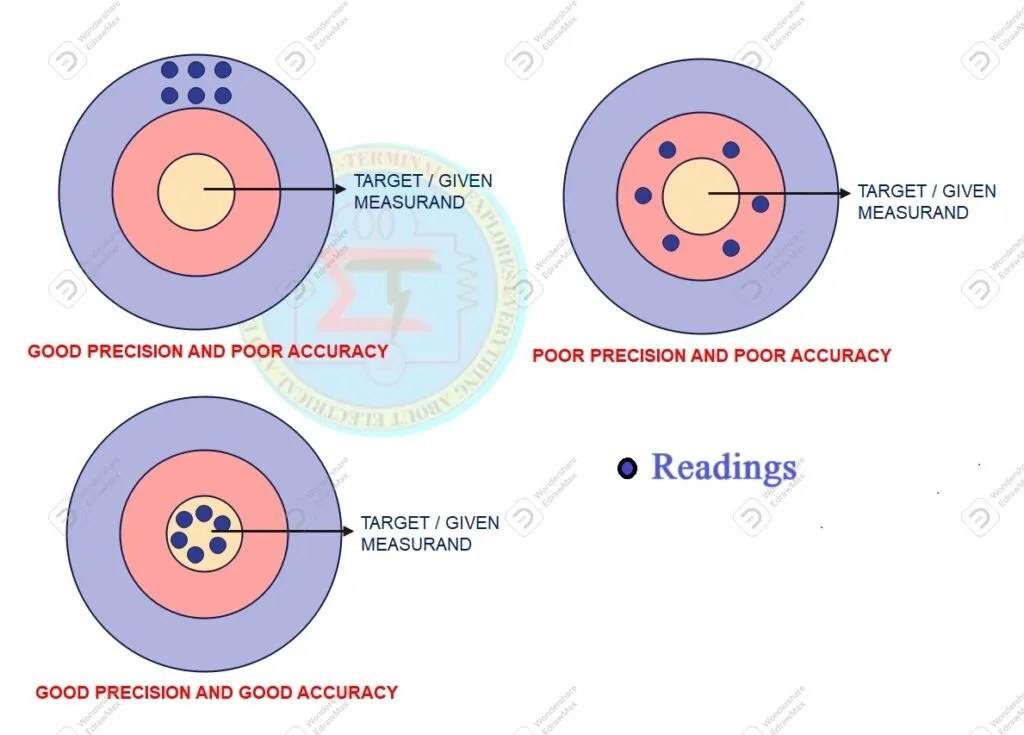

Instrumentation is a very important area of engineering. In every process, the output must be compared with a reference value, and many types of instruments are used for this purpose. Accuracy and precision are the most important characteristics of an instrument in a measurement, because they describe how close a measurement is to the reference value and how consistently it can be repeated. A measurement is nothing but the output of a process.

In this article, accuracy and precision in instrumentation are explained in detail. Before that, look at the figure for a better understanding of precision and accuracy.

Accuracy

Definition of Accuracy

Accuracy is defined as the closeness of the instrument output to the true value of the measured quantity, as per standards.

Accuracy can be determined during calibration by comparing the instrument with a higher-standard instrument (also known as a reference instrument). Deviations in accuracy arise from mechanical factors, the electrical input, and environmental conditions. Accuracy is usually expressed as a percentage.

Example

Consider an ammeter with a full-scale deflection (FSD) of 100 A and an accuracy of ±2% of the reading.

When it reads 50 A, the actual current may lie anywhere between 49 A and 51 A.

Similarly, when it reads 100 A, the actual current may lie between 98 A and 102 A.

The accuracy of an instrument can be improved by calibration.
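The arithmetic in this example can be sketched in a few lines of Python. This is a minimal illustration, not a standard routine: the function name `accuracy_band` and its `full_scale` parameter are hypothetical. By default it treats the percentage as relative to the reading, which reproduces the 49 A to 51 A and 98 A to 102 A bands above. Passing `full_scale` instead treats the percentage as relative to FSD, in which case the absolute error is the same at every point on the scale.

```python
def accuracy_band(reading, accuracy_pct, full_scale=None):
    """Return the (low, high) range in which the true value may lie.

    Hypothetical helper. If full_scale is None, accuracy_pct is taken as a
    percentage of the reading (matching the 49 A to 51 A band in the example).
    If full_scale is given, accuracy_pct is taken as a percentage of the
    full-scale deflection, so the absolute error is constant.
    """
    basis = reading if full_scale is None else full_scale
    error = basis * accuracy_pct / 100  # absolute error in the reading's units
    return reading - error, reading + error


# Ammeter from the example: 100 A FSD, 2 % accuracy.
print(accuracy_band(50, 2))                  # (49.0, 51.0)
print(accuracy_band(100, 2))                 # (98.0, 102.0)
print(accuracy_band(50, 2, full_scale=100))  # (48.0, 52.0) if 2 % of FSD
```

The last call shows why it matters how the percentage is specified: relative to full scale, a 50 A reading carries the same ±2 A uncertainty as a full-scale reading.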

Factors affecting the accuracy of an instrument

- Thermal expansion: When an instrument is used for a long time, its internal parts heat up and start to expand.

- Location: An instrument must be suitable for its geographical location as well as its area of application.

- Environmental conditions: Environmental conditions such as temperature and humidity can severely affect accuracy.

- Scale error: The scale must be aligned with the deflecting torque generated in the instrument.

Precision

Definition

Precision can be defined as the ability of an instrument to reproduce a certain set of readings with a given accuracy.

The precision of an instrument depends on its repeatability.

Repeatability is defined as the ability of an instrument to reproduce the same output when the same measurand (input quantity) is applied to it repeatedly, in the same direction and under the same conditions.

Note that the precision of an instrument should lie within its accuracy limits.

Let us consider the same example used for accuracy.

Take the ammeter with 100 A FSD and 2% accuracy. At full-scale deflection, its reading always lies between 98 A and 102 A.

The tables below give a better understanding of accuracy and precision.

Case 1: Precise but not accurate

| Measurand (Input Quantity) | Instrument reading | Precision |
|---|---|---|
| 50 A | 47 A | 47.1 A |
| | | 46.5 A |
| | | 47.3 A |
| | | 46.9 A |
| | | 47 A |

Case 2: Neither precise nor accurate

| Measurand (Input Quantity) | Instrument reading | Precision |
|---|---|---|
| 50 A | 47 A | 47.9 A |
| | | 46.1 A |
| | | 50.3 A |
| | | 51 A |
| | | 49 A |

Case 3: Precise and accurate

| Measurand (Input Quantity) | Instrument reading | Precision |
|---|---|---|
| 50 A | 49.5 A | 49.6 A |
| | | 49.8 A |
| | | 49.4 A |
| | | 49.3 A |
| | | 49.7 A |
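The three tables can be compared numerically with a short Python sketch. It assumes that the five values listed in each table are repeated readings of the same 50 A input, and it reuses the ±2% accuracy from the ammeter example. The helper `summarize` and the choice of sample standard deviation as the measure of spread are illustrative assumptions, not something the tables specify.

```python
from statistics import mean, stdev

TRUE_VALUE = 50.0   # measurand applied in every case (A)
ACCURACY_PCT = 2.0  # accuracy from the ammeter example (percent)

# Repeated readings from the three tables above (A), assumed to be
# five readings of the same 50 A input.
CASES = {
    "Table 1": [47.1, 46.5, 47.3, 46.9, 47.0],
    "Table 2": [47.9, 46.1, 50.3, 51.0, 49.0],
    "Table 3": [49.6, 49.8, 49.4, 49.3, 49.7],
}


def summarize(readings, true_value=TRUE_VALUE, accuracy_pct=ACCURACY_PCT):
    """Return (mean, sample std dev, offset from true value, all-within-band flag)."""
    tolerance = true_value * accuracy_pct / 100  # 1.0 A for 2 % of 50 A
    avg = mean(readings)
    spread = stdev(readings)   # small spread -> high precision (repeatability)
    offset = avg - true_value  # small offset -> high accuracy
    within = all(abs(r - true_value) <= tolerance for r in readings)
    return avg, spread, offset, within


for name, readings in CASES.items():
    avg, spread, offset, within = summarize(readings)
    print(f"{name}: mean={avg:.2f} A, std={spread:.2f} A, "
          f"offset={offset:+.2f} A, all readings within band: {within}")
```

Tables 1 and 3 both show a small spread (roughly 0.3 A and 0.2 A), but only Table 3 keeps every reading inside the 49 A to 51 A band; Table 2 scatters by roughly 2 A.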

Factors affecting the precision of an instrument

- Human error: The observer must know how to read the instrument properly.

- Environmental conditions: Temperature and humidity play a vital role in instrument performance.

- Installation conditions: The instrument must be installed so that the user can read it with minimal effort.

Difference between accuracy and precision

| Accuracy | Precision |
|---|---|
| It is the closeness of the measured value to the true value of the input quantity. | It depends on repeatability and reproducibility. |
| Accuracy can be improved by calibration. | Precision cannot be improved by calibration; proper care must be taken while handling the instrument to obtain precise values. |
| Accuracy depends on systematic error. | Precision depends on random error. |
| Accuracy is essential in measurement. | Precision is equally important, and it should lie within the accuracy limits. |
| Accuracy is not a sufficient condition for precision. | Precision is not a sufficient condition for accuracy. |
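The rows on calibration and on systematic versus random error can be illustrated with a short Python sketch. It assumes a simple offset-only calibration: the hypothetical `calibrate` helper estimates the systematic error as the difference between a known reference value and the mean reading, then adds that correction to every reading. Real calibration procedures can be more involved (for example, correcting gain as well as offset); this only models the simplest case.

```python
from statistics import mean, stdev


def calibrate(readings, reference):
    """Hypothetical offset-only calibration.

    Estimates the systematic error as reference - mean(readings)
    and adds that correction to every reading.
    """
    correction = reference - mean(readings)
    return [r + correction for r in readings]


# Readings of a 50 A input, taken from the first table (A).
readings = [47.1, 46.5, 47.3, 46.9, 47.0]
corrected = calibrate(readings, reference=50.0)

print(f"before: mean={mean(readings):.2f} A, std={stdev(readings):.2f} A")
print(f"after:  mean={mean(corrected):.2f} A, std={stdev(corrected):.2f} A")
```

The mean moves from about 46.96 A to 50 A, while the standard deviation stays at about 0.30 A: the correction removes the systematic error (improving accuracy) but leaves the random scatter, and hence the precision, unchanged.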